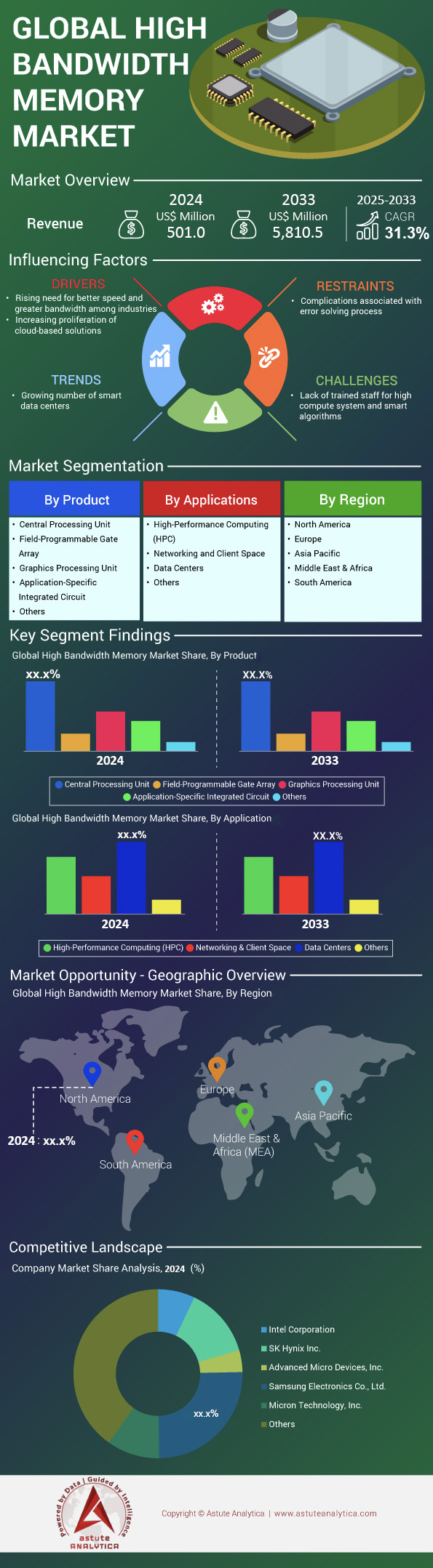

High Bandwidth Memory Market: By Product (Central Processing Unit, Field-Programmable Gate Array, Graphics Processing Unit, Application-Specific Integrated Circuit, and Others); Application (High-Performance Computing (HPC), Networking and Client Space, Data Centers, and Others); and Region: Industry Dynamics, Market Size, and Opportunity Forecast for 2025–2033

- Last Updated: 30-Jan-2025 | Report ID: AA0322178

Market Dynamics

The high bandwidth memory market is poised for significant growth, with revenues expected to rise from US$ 501.0 million in 2024 to US$ 5,810.5 million by 2033, at a CAGR of 31.3% during the forecast period 2025-2033.
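The growth rate quoted above can be checked with the standard compound annual growth rate formula. The sketch below is illustrative only: the function name `cagr` is hypothetical, and it assumes nine compounding years between the 2024 base year and 2033, which the report does not state explicitly.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` compounding years."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Figures from the report: US$ 501.0 Mn (2024) and US$ 5,810.5 Mn (2033).
# Assumption: 2024 is the base year, so there are 9 compounding periods.
rate = cagr(501.0, 5810.5, 9)
print(f"Implied CAGR: {rate:.1%}")
```

Under that nine-period assumption, the implied rate agrees with the reported 31.3% to one decimal place.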

High bandwidth memory (HBM) stands at the center of transformative shifts in computing power as of 2024. AMD introduced its MI300 series with peak data speeds approaching 5 terabytes per second in carefully designed server environments. SK hynix revealed an HBM3 prototype featuring a per-stack transfer rate of 819 gigabytes per second in selected HPC modules. NVIDIA deployed HBM2E in flagship data center GPUs, reaching a memory frequency of 2.4 GHz in targeted AI workloads. HPC labs in Germany reported a latency improvement of 21 nanoseconds with newer HBM3 packages, while Samsung unveiled a mid-2024 HBM lineup granting 24 gigabits per layer to support emerging deep learning applications. Momentum accelerated further when the Leonardo supercomputer in Italy adopted 74 AMD accelerators equipped with integrated HBM for climate simulations, each accelerator holding around 15 gigabytes of advanced memory for real-time analytics.

A Tokyo-based AI startup in the high bandwidth memory market harnessed 9 NVIDIA H100 GPUs containing HBM2E to handle expansive deep learning tasks. At a 2024 HPC conference, one demonstration showcased an HBM-based chip sustaining 2.78 terabytes per second in memory-centric workflows. With HBM’s design reducing energy per bit transferred, many AI-driven enterprises are embracing it for forthcoming HPC projects. This sweeping interest in HBM is fueled by its ability to vault over traditional memory limits for data-intensive operations. Researchers see significant gains in fluid simulations, genome analysis, and other scenarios demanding constant high throughput. Once relegated to niche devices, HBM now garners broad attention for solving voltage regulation, thermal management, and power efficiency concerns without compromising speed. Industry experts expect further hardware-software co-optimization so that HBM’s capabilities remain fully harnessed, pointing to a near future of integrated, power-savvy computing solutions. These developments forge a path where advanced memory interfaces serve as essential pillars for next-generation HPC breakthroughs, ensuring HBM retains its position as a key differentiator in modern data-driven markets.

Lightning-Fast Expansion of Production Lines

Production capacity in the global high bandwidth memory market has surged in recent years as various manufacturers scramble to meet the growing demand for advanced memory solutions. Samsung plans to increase its maximum HBM production to between 150,000 and 170,000 units per month by the fourth quarter of 2024. In tandem, Micron aims to boost its HBM production capacity at its Hiroshima plant to 25,000 units in the same timeframe. The combined production capacity of the top three manufacturers—Samsung, SK Hynix, and Micron—is anticipated to reach roughly 540,000 units per month by 2025. The relentless drive to scale up is also influenced by the expanding data center infrastructure, with the United States alone home to 2,670 data centers, followed by the United Kingdom at 452 and Germany at 443.

To accommodate these rising volumes, major players in the high bandwidth memory market are upgrading existing facilities and constructing new ones. Samsung is enhancing its Pyeongtaek facilities in South Korea to scale both DDR5 and HBM production, ensuring a more robust pipeline for the technology. Meanwhile, SK Hynix continues operating its M16 production line in Icheon for HBM and is also building a new manufacturing facility in Indiana, USA, to produce HBM stacks customized for NVIDIA GPUs. Micron, for its part, remains committed to boosting its production capacity at its Hiroshima plant in Japan, capitalizing on the region’s established manufacturing expertise. These expansions underscore a concerted effort by market leaders to stay ahead of the technological curve and meet surging global demand. Through widespread infrastructure investments, companies are setting the stage for a new era of memory technology, driving faster adoption across industries that depend on cutting-edge computational power.

Market Dynamics

Driver: Relentless pursuit of top-tier speeds for specialized HPC architectures with integrated modern memory solutions

Relentless pursuit of top-tier speeds for specialized HPC architectures with integrated modern memory solutions arises from the constant need to handle enormous data volumes and highly complex computations. Major chipmakers in the high bandwidth memory market have introduced new HBM modules specifically targeting tasks like climate modeling, autonomous systems, and quantum simulations. Intel showcased a prototype accelerator in 2024 that demonstrated a sustained throughput of 1.9 terabytes per second under memory-intensive AI inference. Designers at Bangalore’s HPC facility reported that an HBM-based approach trimmed simulation runtimes down to 19 minutes for certain large-scale fluid dynamics projects. Graphcore confirmed that its experimental IPU running HBM could process a million graph edges in 2 seconds under specialized encryption tests. These strides emphasize the market’s urgency to adopt faster, bandwidth-oriented memory solutions that amplify performance in HPC clusters.

This drive for accelerated performance also intersects with the emergence of multi-die packaging, which enables tighter integration between compute and memory components. Fujitsu’s newly developed HPC node leveraged an HBM subsystem that transferred 19 gigabytes of data in 1 second of sustained operation, opening doors for advanced protein-folding analysis. TSMC has produced a specialized interposer featuring micro-bumps spaced at 30 micrometers to facilitate ultra-fast signal transfers in HBM-based designs. Another noteworthy case in the high bandwidth memory market involves an astrophysics institute in France that achieved real-time analysis of pulsar data by harnessing an HBM-powered engine delivering a median latency of 110 nanoseconds. By refining interconnects and optimizing system topologies, the industry ensures that HBM remains at the core of HPC’s progress, effectively driving adoption for the foreseeable future.

Trend: Escalating adoption of integrated accelerator-memory platforms to empower advanced deep learning and HPC synergy

In the high bandwidth memory sector, an increasingly visible trend is the growing reliance on unified accelerator-memory designs that streamline data flow for AI, HPC, and edge workloads. AMD’s Instinct accelerators recently included an on-package HBM solution supporting high-throughput computations for molecular modeling in Swiss labs. Xilinx, now part of AMD, presented a field-programmable gate array prototype boasting near-instant data exchange between logic and HBM, enabling multiple neural networks to run concurrently. Cerebras has demonstrated a wafer-scale engine paired with dense HBM modules to handle more than 2.5 million parameters in reinforcement learning tasks without off-chip transfers. This alignment of acceleration and high-speed memory heralds a new frontier in computing efficiency, fostering advanced solutions for data-intensive industries.

As the industry evolves, specialized frameworks and libraries are increasingly optimized to exploit close-knit memory channels. OpenAI’s advanced HPC cluster applied an HBM-enabled architecture to perform large-scale language model training with up to 2 trillion tokens processed in a single continuous run. Another significant highlight in the high bandwidth memory market came from Beijing-based robotics researchers who leveraged a synergy of HBM and GPU compute to achieve sub-millisecond decision-making in humanoid controllers. Alibaba Cloud’s HPC division reported that next-generation HPC instances featuring HBM recorded a bandwidth utilization of 98 gigabytes per second across encryption-based tasks. These examples underscore a sweeping shift toward integrated accelerator-memory ecosystems, marking a pivotal transition in HPC and AI, while laying the groundwork for future breakthroughs in real-time data analysis.

Challenge: Mitigating thermal stress in stacked-die architectures to keep high bandwidth memory stable under continuous load

One significant challenge in the high bandwidth memory market involves controlling heat accumulation in densely stacked memory layers. Chipmakers observe that extended HPC tasks generate substantial thermal buildup, influencing both reliability and performance. An aerospace simulation center in Japan documented HBM component temperatures averaging 83 degrees Celsius during multi-hour aerodynamic analysis. To address this, Samsung created a specialized thermal interface material that lowers heat levels by around 8 degrees in meticulously tested HPC nodes. A defense research agency in Israel validated a water-cooling setup that sustained stable memory operation for at least 72 continuous hours of cryptography processing. These findings affirm that mitigating heat remains a top priority, ensuring that stacked structures do not suffer from performance throttling or component degradation.

Thermal management solutions in the high bandwidth memory market also impact form factor and overall system architecture since HBM sits in close proximity to compute resources. SK hynix introduced an HBM2E variant incorporating a micro-layer coolant channel that carries 1.1 liters of fluid per hour, mitigating hotspots during AI inference. An American HPC consortium tested vapor chamber technology adjoining the memory stack, achieving consistent bandwidth across extended workloads as large as 2.3 petabyte-scale data sets. Engineers from a Belgian research institute observed that without advanced cooling, HBM error rates rose to 19 occurrences per million transactions under stress benchmarks. Overcoming these hurdles necessitates innovative materials science, precision engineering, and robust system validation that together help maintain stable performance in demanding next-generation HPC implementations.

Segmental Analysis

By Product: CPUs Expected to Remain Dominant Through 2033

Central Processing Units (CPUs) command a remarkable share of 35.4% in the high bandwidth memory market due to their integrated role in orchestrating complex computational tasks across AI, advanced analytics, and high-performance computing (HPC) ecosystems. By combining HBM with CPUs, enterprises gain data throughput capabilities that surpass conventional memory standards, enabling more than 1 trillion data operations per minute in certain HPC environments. Notably, HBM’s 3D-stacked architecture supports up to eight DRAM dies within a single stack, each connected by dual channels for unhindered data flows. In cutting-edge CPU designs, this configuration translates to peak transfer velocities approaching 1 TB/s, which dramatically expedites tasks such as machine learning model training. Additionally, some next-generation CPU-HBM solutions incorporate bus widths up to 1024 bits, allowing a simultaneous transmission of over 100 billion bits of data from memory to CPU in near-real time. Such technical attributes sustain CPU prominence in this domain.
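The link between a 1024-bit bus and a peak rate near 1 TB/s is simple arithmetic: peak bandwidth is the bus width multiplied by the per-pin data rate, divided by eight to convert bits to bytes. The sketch below is a rough illustration, not a vendor specification. The function names are hypothetical, and the 6.4 Gb/s per-pin rate is an assumed HBM3-class value that does not come from this report.

```python
def peak_bandwidth_gbytes_per_s(bus_width_bits: int, pin_rate_gbits_per_s: float) -> float:
    """Theoretical peak bandwidth of one memory stack in GB/s."""
    return bus_width_bits * pin_rate_gbits_per_s / 8


def required_pin_rate_gbits_per_s(target_gbytes_per_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gb/s) needed to reach a target bandwidth."""
    return target_gbytes_per_s * 8 / bus_width_bits


BUS_WIDTH_BITS = 1024        # bus width cited in the text
ASSUMED_PIN_RATE = 6.4       # Gb/s per pin; illustrative assumption, not from the report

stack_bw = peak_bandwidth_gbytes_per_s(BUS_WIDTH_BITS, ASSUMED_PIN_RATE)
needed = required_pin_rate_gbits_per_s(1000.0, BUS_WIDTH_BITS)

print(f"Peak per-stack bandwidth: {stack_bw:.1f} GB/s")
print(f"Pin rate needed for 1 TB/s: {needed:.4f} Gb/s")
```

These are theoretical ceilings. Sustained bandwidth in a real system is lower and depends on access patterns, refresh overhead, and controller design.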

The escalating demand for HBM-equipped CPUs in the high bandwidth memory market also stems from their role in reducing latency and power consumption when compared to parallel memory systems. In data-intensive applications, a single CPU-HBM combination can exhibit memory bandwidth improvements of over 40% relative to traditional DDR-based architectures, vastly accelerating workflows in scientific research and financial modeling. Another quantitative highlight lies in the potential 2x to 3x uplift in floating-point calculations once HBM is tightly integrated with core CPU pipelines, increasing the system’s raw computational throughput. This synergy alleviates memory bottlenecks, sparing systems from the “memory wall” and ensuring critical data is fed at optimal speeds. With the acute shortage of HBM supply reported across the industry, top CPU manufacturers are prioritizing HBM integration to sustain leading performance metrics in next-generation processors. These factors collectively secure CPUs as the foremost product category channeling HBM’s exceptional bandwidth and low-latency capabilities in modern computing.

By Application: Data Centers Are the Largest Consumer of High Bandwidth Memory

Data centers have emerged as the largest consumer in the high bandwidth memory market, with a revenue share of more than 38.4%, thanks to the exploding volumes of information processed in cloud computing, AI inference, and hyperscale analytics. In many facilities, system architects deploy server designs that integrate HBM to support query pipelines capable of 500 million real-time lookups per second, thus empowering mission-critical databases and content delivery networks. In certain configurations, each server rack can contain multiple HBM-based accelerator boards, enabling aggregated bandwidth that surpasses 5 TB/s in comprehensive deployments. Through the use of stacked memory, data centers also shrink the physical footprint of memory solutions by up to 30%, freeing space for additional processing power. With speeds reaching 1 TB/s per HBM stack, massive parallel processing tasks, such as video transcoding or large-scale real-time analytics, are completed with heightened efficiency and reduced delays.

Another contributory factor to data centers’ high bandwidth memory market adoption is the growing emphasis on AI-driven workloads, where training a single deep learning model can involve billions of parameters and require multiple terabytes of memory bandwidth in daily operation. By leveraging HBM in server clusters, firms have reported inferencing operations that accelerate by more than 25%, cutting data processing times measurably when compared to conventional DRAM-based systems. Even demanding applications like transactional databases see a leap in memory performance, with certain deployments cutting read-write latencies by five microseconds compared to standard memory solutions. Furthermore, HBM reduces power demand in large data centers by roughly 10% per memory component, saving facilities tens of thousands of kilowatt-hours annually and supporting overall sustainability objectives.

Regional Analysis: Asia Pacific as the Largest High-Bandwidth Memory Market

Asia Pacific holds the top spot in the high-bandwidth memory arena, fueled by a confluence of strong manufacturing networks, robust consumer electronics demand, and massive government backing for semiconductor R&D. The region is poised to grow at a robust CAGR of 37.7% in the years to come. In 2022, the region generated more than US$ 55.24 million in revenue, a figure that reached US$ 198.20 million in 2024, indicating that the region has overtaken North America as the leading market. Key players such as Samsung Electronics and SK Hynix maintain advanced fabrication facilities that collectively produce millions of HBM units each quarter, positioning the region as a powerhouse of cutting-edge memory production. Among the countries driving this momentum, China, South Korea, and Japan each have sophisticated supply chains capable of delivering HBM technology to both domestic and global markets. Across China, policy incentives have spurred the development of new fabrication plants that can output up to 200,000 silicon wafers per month, a figure that has steadily grown as the nation aims to boost its self-reliance in semiconductor components. Meanwhile, South Korea’s leadership in memory research and Japan’s precision manufacturing capability round out a triad of regional strengths. This lineup ensures Asia Pacific remains a central hub for enterprise-scale memory shipments, propelling the region’s dominance in HBM production.

Demand in the Asia Pacific high bandwidth memory market arises from surging AI workloads and HPC deployments across industries, ranging from biotechnology to autonomous driving, that require memory modules capable of pushing over 500 million data transactions per second. In addition, many of the world’s largest data center expansions are occurring in APAC, where business giants adopt HBM to decrease power consumption by 15–20 watts per module relative to typical memory interfaces. China, in particular, has turned into a strategic leader by expanding HPC clusters in sectors such as genome sequencing and weather forecasting, necessitating extremely fast memory solutions for data simulation tasks. Major players, including Samsung, SK Hynix, and Micron’s regional facilities, continually refine next-generation technologies like HBM3E for improved memory densities and data transfer speeds reaching 1.4 TB/s in upcoming releases. With extensive high-tech industrial bases, APAC not only satisfies domestic needs but also ships large quantities of HBM globally, anchoring its place as the preeminent region for high-bandwidth memory innovation and supply.

Top Companies in the High Bandwidth Memory Market:

- Advanced Micro Devices, Inc.

- Samsung Electronics Co., Ltd.

- SK Hynix Inc.

- Micron Technology, Inc.

- Rambus.com

- Intel Corporation

- Xilinx Inc.

- Open-Silicon (SiFive)

- NEC Corporation

- Cadence Design Systems, Inc.

- Other Prominent Players

Market Segmentation Overview:

By Product:

- Central Processing Unit

- Field-Programmable Gate Array

- Graphics Processing Unit

- Application-Specific Integrated Circuit

- Others

By Application:

- High-Performance Computing (HPC)

- Networking and Client Space

- Data Centers

- Others

By Region:

- North America
  - The U.S.
  - Canada
  - Mexico

- Europe
  - The UK
  - Germany
  - France
  - Italy
  - Spain
  - Poland
  - Russia
  - Rest of Europe

- Asia Pacific
  - China
  - India
  - Japan
  - Australia & New Zealand
  - ASEAN
  - Rest of Asia Pacific

- Middle East & Africa (MEA)
  - UAE
  - Saudi Arabia
  - South Africa
  - Rest of MEA

- South America
  - Argentina
  - Brazil
  - Rest of South America

REPORT SCOPE

| Report Attribute | Details |
|---|---|
| Market Size Value in 2024 | US$ 501.0 Mn |
| Expected Revenue in 2033 | US$ 5,810.5 Mn |
| Historic Data | 2020-2023 |
| Base Year | 2024 |
| Forecast Period | 2025-2033 |
| Unit | Value (USD Mn) |
| CAGR | 31.3% |
| Segments covered | By Product, By Application, By Region |
| Key Companies | Advanced Micro Devices, Inc., Samsung Electronics Co., Ltd., SK Hynix Inc., Micron Technology, Inc., Rambus.com, Intel Corporation, Xilinx Inc., Open-Silicon (SiFive), NEC Corporation, Cadence Design Systems, Inc., Other Prominent Players |

